Imagine this: you’ve just built a data pipeline that runs smoothly and efficiently.

Your logic is flawless.

Your notebooks are pristine.

You sit back, smugly enjoying your success, when your manager strolls over and says:

> “Great work! Now deploy it to staging. And production. Today.”

Your soul briefly exits your body. Because what this really means is: endless UI clicking, hunting for lost cluster configs, recreating jobs from memory, praying you didn’t forget that one permission setting that will break everything.

If only deploying pipelines wasn’t a high-stakes game of “Will It Break?”

Enter Databricks Asset Bundles: a highly structured, reliable way to ship your data projects.

Think of Asset Bundles as the packing cubes of Databricks. Just as you wouldn’t throw all your vacation clothes haphazardly into a suitcase, you shouldn’t toss notebooks, pipelines, and cluster configs randomly into production.

Asset Bundles give your project structure, predictability, and portability, so deployments behave exactly as intended—no surprises, no firefighting.

An Asset Bundle packages everything your project needs to run, including:

- Notebooks

- Workflows & Pipelines

- Libraries

- Cluster specifications

- Permissions and access controls

- Secrets

- Environment-specific overrides

- Deployment logic

All of this is declared in a single `databricks.yml` file, which acts as the canonical source of truth for your project. Think of it as the master blueprint that tells Databricks: “Here’s everything you need, exactly as it should be, for every environment.”
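To make that concrete, here is a minimal sketch of a bundle configuration file (current Databricks CLI versions expect it to be named `databricks.yml`). The bundle name, notebook paths, user email, workspace URLs, and the AWS node type `i3.xlarge` are hypothetical placeholders; the parts worth noticing are the top-level `bundle`, `resources`, and `targets` mappings.

```yaml
# databricks.yml: minimal bundle with one job and one pipeline (all names are hypothetical)
bundle:
  name: sales_pipeline

resources:
  jobs:
    ingest_job:
      name: ingest_job
      tasks:
        - task_key: ingest
          job_cluster_key: main
          notebook_task:
            notebook_path: ./src/ingest.ipynb      # path relative to this file
      job_clusters:
        - job_cluster_key: main
          new_cluster:
            spark_version: 13.3.x-scala2.12        # Databricks Runtime 13.3 LTS
            node_type_id: i3.xlarge                # AWS node type; differs on Azure/GCP
            num_workers: 2
  pipelines:
    sales_dlt:
      name: sales_dlt
      libraries:
        - notebook:
            path: ./src/transform_dlt.ipynb        # hypothetical pipeline notebook

targets:
  dev:
    mode: development        # prefixes deployed resource names with the developer's name
    default: true            # used when no -t/--target flag is given
    workspace:
      host: https://dev-workspace.cloud.databricks.com
  staging:
    workspace:
      host: https://staging-workspace.cloud.databricks.com
  prod:
    mode: production
    workspace:
      host: https://prod-workspace.cloud.databricks.com
      root_path: /Workspace/Users/deployer@example.com/.bundle/${bundle.name}/${bundle.target}
    run_as:
      user_name: deployer@example.com
```

Every target reuses the same `resources`; only the workspace and the deployment mode change between dev, staging, and prod.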

If your project were a person, Databricks would step in and say:

“I will ensure your project is structured, consistent, and ready to deploy across all environments.”

Structure. Discipline. Joy.

Deployments often fail not because the code is bad, but because environments are unpredictable. Even subtle differences between dev, staging, and production can cause pipelines to break: a missing library here, a cluster policy change there, or a secret scope that wasn’t set.

Asset Bundles solve this problem by:

Eliminating configuration drift: Every environment uses the same defined resources.

Reducing human error: Manual tweaks and “just this once” fixes are no longer necessary.

Improving reproducibility: Rollbacks, staging tests, and CI/CD integration become simple.

Increasing confidence: Engineers can deploy knowing that what works in dev will work in prod.

For full details, see the official Databricks Asset Bundles documentation.

Deployments rarely fail because the code itself is faulty. More often, they fail because the environment is unpredictable. Subtle differences between dev, staging, and production can silently introduce errors, slow performance, or outright failures.

Permissions missed: A pipeline may fail if a user or job lacks access to a cluster, notebook, or secret.

Wrong runtime used: Features available in one Spark version may be absent in another.

Secret scopes not set: Missing secrets can break jobs or prevent data access.

Cluster policies changed silently: Autoscaling, node types, or Spark configs may differ unexpectedly.

Job configs not replicated properly: Pipeline schedules, retries, or dependencies may be inconsistent.

Pipelines manually tweaked in one environment but not others: Small manual changes create unpredictable behavior.

Even a single overlooked setting can cascade into hours of debugging, late-night alerts, or production downtime.

- Dev environment: flawless

- Staging: slightly weird, but manageable

- Production: catastrophic

The culprit? A runtime mismatch: staging ran Databricks Runtime 13.3, while production was still on 12.2. The pipeline used a feature available only in 13.3, so production failed immediately, triggering a flood of alerts and sending the on-call engineer scrambling at 2 AM.

This scenario is all too familiar. Even minor differences between environments (library versions, cluster configurations, or secret scopes) can silently break production pipelines.

Enter Databricks Asset Bundles. By packaging every notebook, pipeline, cluster config, library, permission, and secret into a single declarative bundle, they ensure:

Environment consistency: Dev, staging, and prod are built from the same definitions and differ only where you declare explicit overrides.

Elimination of silent drift: No more “But it worked in dev!” moments.

Predictable deployments: Everything is defined once and deployed everywhere.

Reduced human error: Manual tweaks are unnecessary, and accidental differences are automatically corrected in the next deployment.

At the heart of every Asset Bundle lies the `databricks.yml` file. It is small but mighty: the master blueprint for your entire Databricks project.

This declarative file ensures that every resource, dependency, and configuration is defined once and deployed consistently across all environments.

A well-structured bundle file can:

Define every resource in your project - pipelines, notebooks, jobs, workflows.

Specify deployment environments - dev, staging, production, or custom setups.

Store environment-specific overrides - runtime versions, secret scopes, cluster configurations.

Track dependencies and settings - libraries, permissions, secrets, cluster policies.

Essentially, it turns what was once a messy, error-prone manual process into a single source of truth.
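Here is a sketch of how environment-specific overrides and secret scopes might be expressed. It assumes a hypothetical job named `ingest_job`, hypothetical secret scopes `dev-scope` and `prod-scope`, and a hypothetical secret key `source-db-password`. Shared settings are declared once; each target lists only what differs.

```yaml
# Shared definition plus per-target overrides (all names are hypothetical)
variables:
  secret_scope:
    description: Secret scope holding source-system credentials
    default: dev-scope

resources:
  jobs:
    ingest_job:
      name: ingest_job
      tasks:
        - task_key: ingest
          job_cluster_key: main
          notebook_task:
            notebook_path: ./src/ingest.ipynb
      job_clusters:
        - job_cluster_key: main
          new_cluster:
            spark_version: 13.3.x-scala2.12
            node_type_id: i3.xlarge
            num_workers: 2
            spark_env_vars:
              # Only the reference lives in Git; Databricks resolves the value at cluster start
              SOURCE_DB_PASSWORD: "{{secrets/${var.secret_scope}/source-db-password}}"

targets:
  dev:
    default: true
  prod:
    variables:
      secret_scope: prod-scope           # same secret key, production scope
    resources:
      jobs:
        ingest_job:
          job_clusters:
            - job_cluster_key: main      # merged with the shared cluster by this key
              new_cluster:
                num_workers: 8           # only the worker count differs in prod
```

Anything a target does not mention is inherited unchanged, so the `targets` section doubles as a written record of exactly how prod differs from dev.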

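Deployment is simple. From the bundle’s root folder, run:

`databricks bundle deploy`

On its own, this deploys to the target marked `default: true`; add `-t` (or `--target`) to choose another environment, for example `databricks bundle deploy -t prod`. Databricks then ensures: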

- Jobs appear in the correct workspace

- Pipelines deploy correctly

- Permissions are applied consistently

- Clusters match their declared configuration in every environment
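Permissions can live in the same file. A hedged sketch, assuming hypothetical groups `data-engineers`, `data-analysts`, and `on-call`: the top-level `permissions` mapping applies to every resource the bundle deploys, while a resource-level entry grants something narrower on a single job.

```yaml
# Bundle-wide permissions (group names are hypothetical)
permissions:
  - level: CAN_MANAGE
    group_name: data-engineers
  - level: CAN_VIEW
    group_name: data-analysts

resources:
  jobs:
    ingest_job:
      name: ingest_job
      # Extra, job-specific grant: the on-call group may trigger and cancel runs
      permissions:
        - level: CAN_MANAGE_RUN
          group_name: on-call
      tasks:
        - task_key: ingest
          notebook_task:
            notebook_path: ./src/ingest.ipynb
          # cluster settings omitted for brevity
```

Because these grants are deployed together with the jobs and pipelines, each environment receives the same access rules, and any change to them goes through the same review as code.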

Asset Bundles shine even more when integrated with version control and automated pipelines:

Git Integration: Every bundle version is tracked, auditable, and rollback-ready. Changes are transparent, and teams can confidently review, merge, or revert deployments.

CI/CD Automation: Bundles can be deployed automatically when code is merged into a branch, removing manual, error-prone deployment steps.

Auditability & Compliance: Know exactly what was deployed, by whom, and when—ideal for regulated environments or large teams.
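A sketch of the CI/CD idea using GitHub Actions. It assumes the bundle defines `staging` and `prod` targets (each with its own `workspace.host`) and that the repository stores hypothetical secrets `DATABRICKS_STAGING_TOKEN` and `DATABRICKS_PROD_TOKEN`, for example tokens for a deployment service principal. `databricks/setup-cli` is the Databricks-published action that installs the CLI; the file, workflow, and job names are illustrative.

```yaml
# .github/workflows/deploy-bundle.yml (hypothetical file name)
name: deploy-bundle

on:
  push:
    branches: [main]          # deploy whenever code is merged into main

jobs:
  deploy-staging:
    runs-on: ubuntu-latest
    env:
      DATABRICKS_TOKEN: ${{ secrets.DATABRICKS_STAGING_TOKEN }}
    steps:
      - uses: actions/checkout@v4
      - uses: databricks/setup-cli@main
      - name: Validate bundle configuration
        run: databricks bundle validate -t staging
      - name: Deploy to staging
        run: databricks bundle deploy -t staging

  deploy-prod:
    needs: deploy-staging     # prod runs only after staging succeeds
    runs-on: ubuntu-latest
    environment: production   # a GitHub environment can require manual approval
    env:
      DATABRICKS_TOKEN: ${{ secrets.DATABRICKS_PROD_TOKEN }}
    steps:
      - uses: actions/checkout@v4
      - uses: databricks/setup-cli@main
      - name: Deploy to production
        run: databricks bundle deploy -t prod
```

The workflow file itself is versioned alongside the bundle, so the Git history records what was deployed, when, and from which commit.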

Tip: Treat your bundle repository like production code. Use branching, pull requests, and code reviews to ensure that every deployment is validated before it reaches production.

A team spent three days debugging why a pipeline consumed double the compute in production but ran smoothly in dev.

The culprit? A single missing library, installed manually in dev but never added to prod. Without the optimized dependency, the pipeline silently fell back to a slower execution path.

Asset Bundles prevent this: all libraries are declared, versioned, and deployed consistently, eliminating silent differences.
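A sketch of declaring task libraries with exact version pins. The package versions and the Maven artifact below are hypothetical choices for illustration only; the point is that every target installs the same list.

```yaml
resources:
  jobs:
    ingest_job:
      name: ingest_job
      tasks:
        - task_key: ingest
          job_cluster_key: main     # assumes a job cluster named "main" is defined on this job
          notebook_task:
            notebook_path: ./src/ingest.ipynb
          libraries:
            # Exact pins: dev, staging, and prod all install these versions
            - pypi:
                package: pyarrow==14.0.2
            - pypi:
                package: requests==2.31.0
            - maven:
                coordinates: com.example:fast-parser:1.4.0   # hypothetical artifact
```

Because job clusters are created fresh for each run, a library missing from this list is missing in every environment, dev included, so the gap tends to surface early instead of as a silent slowdown in production.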

Another team ran into a runtime mismatch. Dev used Databricks Runtime 13.3, while prod had 12.2. A feature used in dev failed in production, triggering alerts at midnight.

With Asset Bundles, cluster runtime versions are part of the bundle, preventing such catastrophic drift.
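One way to apply this is to declare the runtime once as a bundle variable and reference it from every cluster. A sketch, assuming a hypothetical job and Databricks Runtime 13.3 LTS (`13.3.x-scala2.12`) as the chosen version:

```yaml
variables:
  spark_version:
    description: Databricks Runtime used by every job cluster in every target
    default: 13.3.x-scala2.12

resources:
  jobs:
    ingest_job:
      name: ingest_job
      tasks:
        - task_key: ingest
          job_cluster_key: main
          notebook_task:
            notebook_path: ./src/ingest.ipynb
      job_clusters:
        - job_cluster_key: main
          new_cluster:
            spark_version: ${var.spark_version}   # no target overrides it, so all targets match
            node_type_id: i3.xlarge               # AWS node type (assumption)
            num_workers: 2
```

Upgrading the runtime then becomes a one-line change that is reviewed, merged, and rolled out to every target together. To see what a given target will actually receive, `databricks bundle validate -t prod --output json` prints the resolved configuration.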

Jobs break in production but work in dev: A pipeline runs perfectly in development but fails mysteriously in staging or prod. The cause? Anything from a missing library to a subtle runtime mismatch.

Cluster configurations and pipelines are inconsistent: Differences in Spark versions, autoscaling policies, or attached libraries create unpredictable behavior.

Permissions are a mystery: Access controls are scattered, undocumented, and prone to accidental changes.

Deployments feel like archaeology: Engineers spend hours digging through old jobs, pipelines, and configs left behind by previous team members—trying to piece together what was intended versus what actually exists.

Debugging is reactive: Issues are discovered only after failures, often during off-hours or critical production runs.

In short: deployments are stressful, error-prone, and exhausting.

One command, total synchronization: `databricks bundle deploy` ensures every job, pipeline, and notebook is deployed exactly as intended.

Identical pipelines across environments: Dev, staging, and prod are no longer different worlds—they are mirrors of each other.

No more mysterious breaks: Consistency across clusters, libraries, and configurations eliminates unpredictable failures.

Clean and reproducible environments: Every deployment is auditable and version-controlled. Teams can roll back, replicate, or audit setups at any time.

Self-healing deployments: Even if manual changes occur in one environment, the next deploy corrects them automatically.

Data Engineers: No more manual job recreation or guesswork

DevOps Teams: Embrace GitOps; environments match exactly

Architects: Consistency becomes guaranteed, not aspirational

Analysts & Managers: Fewer late-night emergencies, less stress

Asset Bundles convert tribal knowledge into documented, versioned truth, making deployments:

- Repeatable

- Dependable

- Debuggable

- Automated

This is how mature engineering teams operate—and why teams using Asset Bundles sleep better at night.

1. Define everything declaratively: Clusters, libraries, secrets, permissions. Treat the YAML bundle as your single source of truth.

2. Version control is your friend: Commit every bundle to Git. Tag releases for easy rollback.

3. Test in staging first: Even with Asset Bundles, always deploy to staging to validate everything.

4. Document overrides: If dev/staging/prod differ, explicitly define overrides. Never rely on memory.

5. Automate deployments: Integrate bundles into CI/CD pipelines to remove manual steps entirely.

Effortless deployments: What used to take hours of manual setup now happens with a single command.

No more hidden pitfalls: Configuration mismatches, missing libraries, and forgotten permissions are eliminated.

Consistent environments: Dev, staging, and production are aligned perfectly, every time.

Reproducible deployments: Every release can be rolled back or replicated reliably, without guesswork.

With Asset Bundles, your pipelines behave predictably, your deployments are stress-free, and your team can focus on building value rather than troubleshooting. Your future self—and your nights—will thank you.

Databricks Asset Bundles aren’t just a tool—they’re a strategic approach to shipping data projects reliably and efficiently. By defining everything declaratively, ensuring environment consistency, and integrating seamlessly with Git and CI/CD pipelines, Bundles remove the guesswork from deployments.

For data engineers, DevOps teams, and anyone responsible for production pipelines, Asset Bundles transform chaos into order, stress into confidence, and late-night firefighting into productive engineering time. Deploy once, deploy everywhere, and know it will just work.

Your pipelines deserve stability. Your team deserves sanity. And your deployments deserve Asset Bundles.

New engineers shouldn't learn Docker like they're defusing a bomb. Here's how we created a fear-free learning environment—and cut training time in half." (165 characters)

A complete beginner’s guide to data quality, covering key challenges, real-world examples, and best practices for building trustworthy data.

Explore the power of Databricks Lakehouse, Delta tables, and modern data engineering practices to build reliable, scalable, and high-quality data pipelines."

Ever wonder how Netflix instantly unlocks your account after payment, or how live sports scores update in real-time? It's all thanks to Change Data Capture (CDC). Instead of scanning entire databases repeatedly, CDC tracks exactly what changed. Learn how it works with real examples.

A real-world Terraform war story where a “simple” Azure SQL deployment spirals into seven hard-earned lessons, covering deprecated providers, breaking changes, hidden Azure policies, and why cloud tutorials age fast. A practical, honest read for anyone learning Infrastructure as Code the hard way.

From Excel to Interactive Dashboard: A hands-on journey building a dynamic pricing optimizer. I started with manual calculations in Excel to prove I understood the math, then automated the analysis with a Python pricing engine, and finally created an interactive Streamlit dashboard.

Data doesn’t wait - and neither should your insights. This blog breaks down streaming vs batch processing and shows, step by step, how to process real-time data using Azure Databricks.

A curious moment while shopping on Amazon turns into a deep dive into how Rufus, Amazon’s AI assistant, uses Generative AI, RAG, and semantic search to deliver real-time, accurate answers. This blog breaks down the architecture behind conversational commerce in a simple, story-driven way.

This blog talks about Databricks’ Unity Catalog upgrades -like Governed Tags, Automated Data Classification, and ABAC which make data governance smarter, faster, and more automated.

Tired of boring images? Meet the 'Jai & Veeru' of AI! See how combining Claude and Nano Banana Pro creates mind-blowing results for comics, diagrams, and more.

An honest, first-person account of learning dynamic pricing through hands-on Excel analysis. I tackled a real CPG problem : Should FreshJuice implement different prices for weekdays vs weekends across 30 retail stores?

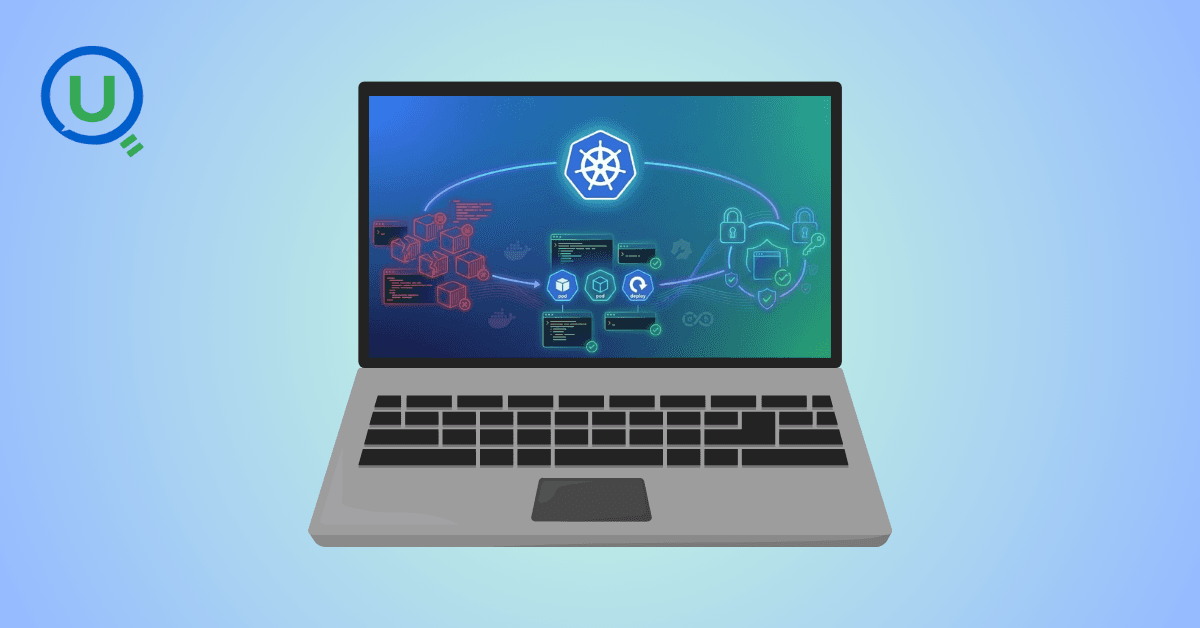

What I thought would be a simple RBAC implementation turned into a comprehensive lesson in Kubernetes deployment. Part 1: Fixing three critical deployment errors. Part 2: Implementing namespace-scoped RBAC security. Real terminal outputs and lessons learned included

This blog walks you through how Databricks Connect completely transforms PySpark development workflow by letting us run Databricks-backed Spark code directly from your local IDE. From setup to debugging to best practices this Blog covers it all.

This blog unpacks how brands like Amazon and Domino’s decide who gets which coupon and why. Learn how simple RFM metrics turn raw purchase data into smart, personalised loyalty offers.

Learn how Snowflake's Query Acceleration Service provides temporary compute bursts for heavy queries without upsizing. Per-second billing, automatic scaling.

A simple ETL job broke into a 5-hour Kubernetes DNS nightmare. This blog walks through the symptoms, the chase, and the surprisingly simple fix.

A data engineer started a large cluster for a short task and couldn’t stop it due to limited permissions, leaving it idle and causing unnecessary cloud costs. This highlights the need for proper access control and auto-termination.

Tracking sensitive data across Snowflake gets overwhelming fast. Learn how object tagging solved my data governance challenges with automated masking, instant PII discovery, and effortless scaling. From manual spreadsheets to systematic control. A practical guide for data professionals.

My first hand experience learning the essential concepts of Dynamic pricing

Running data quality checks on retail sales distribution data

This blog explores my experience with cleaning datasets during the process of performing EDA for analyzing whether geographical attributes impact sales of beverages

Snowflake recommends 100–250 MB files for optimal loading, but why? What happens when you load one large file versus splitting it into smaller chunks? I tested this with real data, and the results were surprising. Click to discover how this simple change can drastically improve loading performance.

Master the bronze layer foundation of medallion architecture with COPY INTO - the command that handles incremental ingestion and schema evolution automatically. No more duplicate data, no more broken pipelines when new columns arrive. Your complete guide to production-ready raw data ingestion

Learn Git and GitHub step by step with this complete guide. From Git basics to branching, merging, push, pull, and resolving merge conflicts—this tutorial helps beginners and developers collaborate like pros.

Discover how data management, governance, and security work together—just like your favorite food delivery app. Learn why these three pillars turn raw data into trusted insights, ensuring trust, compliance, and business growth.

Beginner’s journey in AWS Data Engineering—building a retail data pipeline with S3, Glue, and Athena. Key lessons on permissions, data lakes, and data quality. A hands-on guide for tackling real-world retail datasets.

A simple request to automate Google feedback forms turned into a technical adventure. From API roadblocks to a smart Google Apps Script pivot, discover how we built a seamless system that cut form creation time from 20 minutes to just 2.

Step-by-step journey of setting up end-to-end AKS monitoring with dashboards, alerts, workbooks, and real-world validations on Azure Kubernetes Service.

My learning experience tracing how an app works when browser is refreshed

Demonstrates the power of AI assisted development to build an end-to-end application grounds up

A hands-on learning journey of building a login and sign-up system from scratch using React, Node.js, Express, and PostgreSQL. Covers real-world challenges, backend integration, password security, and key full-stack development lessons for beginners.

This is the first in a five-part series detailing my experience implementing advanced data engineering solutions with Databricks on Google Cloud Platform. The series covers schema evolution, incremental loading, and orchestration of a robust ELT pipeline.

Discover the 7 major stages of the data engineering lifecycle, from data collection to storage and analysis. Learn the key processes, tools, and best practices that ensure a seamless and efficient data flow, supporting scalable and reliable data systems.

This blog is troubleshooting adventure which navigates networking quirks, uncovers why cluster couldn’t reach PyPI, and find the real fix—without starting from scratch.

Explore query scanning can be optimized from 9.78 MB down to just 3.95 MB using table partitioning. And how to use partitioning, how to decide the right strategy, and the impact it can have on performance and costs.

Dive deeper into query design, optimization techniques, and practical takeaways for BigQuery users.

Wondering when to use a stored procedure vs. a function in SQL? This blog simplifies the differences and helps you choose the right tool for efficient database management and optimized queries.

Discover how BigQuery Omni and BigLake break down data silos, enabling seamless multi-cloud analytics and cost-efficient insights without data movement.

In this article we'll build a motivation towards learning computer vision by solving a real world problem by hand along with assistance with chatGPT

This blog explains how Apache Airflow orchestrates tasks like a conductor leading an orchestra, ensuring smooth and efficient workflow management. Using a fun Romeo and Juliet analogy, it shows how Airflow handles timing, dependencies, and errors.

The blog underscores how snapshots and Point-in-Time Restore (PITR) are essential for data protection, offering a universal, cost-effective solution with applications in disaster recovery, testing, and compliance.

The blog contains the journey of ChatGPT, and what are the limitations of ChatGPT, due to which Langchain came into the picture to overcome the limitations and help us to create applications that can solve our real-time queries

This blog simplifies the complex world of data management by exploring two pivotal concepts: Data Lakes and Data Warehouses.

demystifying the concepts of IaaS, PaaS, and SaaS with Microsoft Azure examples

Discover how Azure Data Factory serves as the ultimate tool for data professionals, simplifying and automating data processes

Revolutionizing e-commerce with Azure Cosmos DB, enhancing data management, personalizing recommendations, real-time responsiveness, and gaining valuable insights.

Highlights the benefits and applications of various NoSQL database types, illustrating how they have revolutionized data management for modern businesses.

This blog delves into the capabilities of Calendar Events Automation using App Script.

Dive into the fundamental concepts and phases of ETL, learning how to extract valuable data, transform it into actionable insights, and load it seamlessly into your systems.

An easy to follow guide prepared based on our experience with upskilling thousands of learners in Data Literacy

Teaching a Robot to Recognize Pastries with Neural Networks and artificial intelligence (AI)

Streamlining Storage Management for E-commerce Business by exploring Flat vs. Hierarchical Systems

Figuring out how Cloud help reduce the Total Cost of Ownership of the IT infrastructure

Understand the circumstances which force organizations to start thinking about migration their business to cloud